Artificial Intelligence (AI) is a rapidly evolving technology that is transforming the world. From #FakeDrake to #Terminator, AI is changing the way we live, work, and interact with each other. While AI has the potential to revolutionize our lives for the better, its use also poses significant ethical risks that should concern us all.

In this blog post, we share our findings on the ethical risks of AI in business and why you should care about them. Our article “A survey of AI ethics in business literature: Maps and trends between 2000 and 2021”, published in Frontiers in Psychology, analyzes the literature on AI ethics in business in depth: it maps the current state of the field, identifies emerging trends, and discusses the most pressing ethical issues. You can read the full paper here: https://t.co/epUEiEAxki

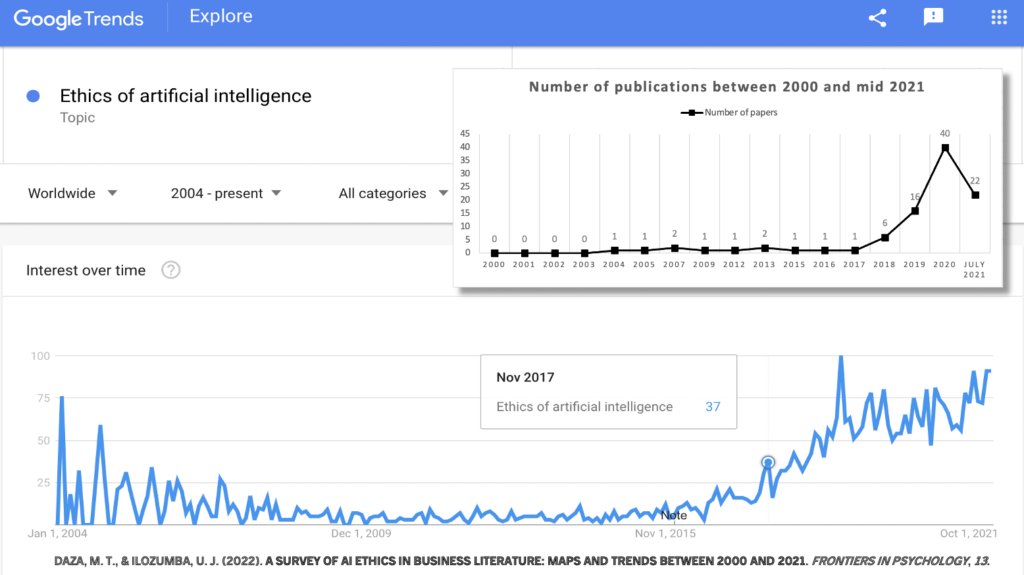

We have observed an increase in the publication of articles on AI ethics since 2018. Notably, authors affiliated with institutions in the US and EU dominate, while China’s absence is surprising given its government’s manifest interest in taking a leading role in AI development. Further analysis of countries’ budgets suggests that the volume of scientific publications on this topic depends not only on funding but also on political agendas.

Initially, publications addressed moral issues related to technology’s impact on companies, such as the tensions between proprietary and open-source software, the misuse of IT resources, and whether computers can help make better ethical decisions. Later, the concept of AI as a subject was proposed, leading to discussions about the moral agency of corporations or machines and at which level of intelligence it should be granted.

In her 2016 article “Beyond Misclassification: The Digital Transformation of Work,” Miriam Cherry is the first to address the impact of AI on the labor market in a factual, rather than conceptual, way. Analyzing different labor court cases in the on-demand economy, Cherry exposes the harmful effects of crowdwork, including workers’ deskilling and precariousness. This article’s significant influence can be attributed to its presentation of concrete evidence of the potential harm AI could cause in the workplace. This has opened the door for subsequent publications to deal with real issues and situations affecting people.

The incursion of AI into consumer products and services has shifted the perception of AI from a tool to an agent that can compete with or replace humans. This shift has fueled growing interest in the ethical implications of AI and, consequently, an increase in scientific publications on the subject.

Advancements in AI have led to situations where machines are now competing with humans, and automation is replacing workers, sparking fears of job losses. Notable examples include IBM’s Watson defeating human champions on Jeopardy! in 2011, Google’s AlphaGo defeating world champion Ke Jie in the game of Go in 2017, and more recently, a Google engineer being fired in 2022 after claiming that the company’s chatbot, LaMDA, was sentient and was demanding legal representation. These examples illustrate the growing capabilities of AI and the ethical questions that arise as machines become more advanced and potentially autonomous.

Five ethical challenges of AI

Our research has identified five ethical challenges that arise from AI and should concern us all. We have turned them into a taxonomy of AI ethics:

- Foundational Issues

- Privacy

- Algorithmic Bias

- Automation and Employment

- Social Media and Public Discourse

Let’s take a closer look at each of these challenges.

Foundational Issues of AI

In 2016, the artificial intelligence program AlphaGo made history by defeating a world champion in the ancient Chinese game of Go with a score of 4:1. In 2017, AlphaZero surpassed that achievement, beating AlphaGo’s successor, AlphaGo Zero, with a score of 60:40. The key difference is that the original AlphaGo was trained over several years using data from thousands of games played by the best human players, whereas AlphaZero learned by playing against itself, without any human data, and it accomplished this in just 34 hours. This highlights the incredible capability of self-learning AI to acquire skills and knowledge beyond human capacity, raising concerns about the potential for autonomous decision-making without human oversight.

This category (Foundational Issues) addresses the limits and capabilities of AI, including trustworthiness, accountability, and agency. To understand these issues, it is important to be familiar with the three levels of intelligence that AI can possess: Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI), and Artificial Super Intelligence (ASI).

While ANI currently outperforms human intelligence in specific domains, true AGI and ASI have yet to be achieved. Experts have varying predictions about when AGI and ASI may be possible, with some suggesting it could take decades or even generations. Even so, and particularly given the rapid advances in generative AI systems, there are concerns that the values of self-governing entities may not be aligned with those of humanity, potentially creating conflicts and challenges.

Although the idea of sentient robots belongs to science fiction (for now), the deployment of AI systems raises concerns about their potential impact on society. Even if sentient machines do not emerge with a volition of their own, there are other sources of concern related to AI.

In 2022, a Google engineer, Blake Lemoine, sparked media attention with his claim that a chatbot had become sentient. “If I didn’t know exactly what it was, a computer program we recently built, I’d think it was a 7- or 8-year-old who knew physics,” said Lemoine, who was fired over his claims about LaMDA, Google’s language model. As language models continue to improve and their output becomes indistinguishable from human conversation, there is a risk that people may not realize that they are interacting with a machine rather than a human.

The responsible development and deployment of AI is an important topic, and various principles, guidelines, and frameworks have been proposed to mitigate possible damages.

Privacy and Transparency

The tension between privacy and transparency has become a dilemma for users of digital platforms. Every time we browse the internet or use a smartphone, we generate information about our habits and preferences that is then stored and used to predict or influence our behavior. While companies leverage our data to offer personalized advertising and services, the information collected by today’s systems can potentially fall into the wrong hands, including hackers, unethical organizations, or authoritarian governments.

The Chinese government stands accused of detaining and persecuting Uyghur Muslims, an ethnic minority group based in the Xinjiang region of China. To control and oppress this population, the Chinese government allegedly employs various tactics, including mass surveillance, detention camps, forced labor, and even forced sterilization, with the assistance of AI systems.

However, privacy concerns extend far beyond this. Algorithmic HR decision-making often involves monitoring employees without their knowledge, and companies that use algorithmic pricing, such as insurers or airlines, may have access to personal data that could lead to discrimination. The use of AI in judicial interrogation tools also raises concerns about violating the legal principle of nemo tenetur se ipsum accusare (no one can be forced to accuse himself).

Additionally, AI-driven devices can classify people based on age, gender, race, or sexual orientation, leading to concerns about discrimination. Researchers from Cambridge University and Microsoft were able to predict sexual orientation from only a few Facebook likes, with 88% accuracy in men and 75% in women. The ease of obtaining these predictions is alarming given that there are still eleven countries that criminalize LGBT people and can impose the death penalty.

Algorithmic Bias

AI has become increasingly prevalent in decision-making processes across a wide range of industries. However, the criteria used by machines for decision-making are not always clear and can constitute a black box. In some cases, the information used to make these decisions is protected by business secrecy, and in others, it is impossible or too expensive to isolate the exact factors these algorithms consider. This lack of transparency can lead to the reproduction and amplification of biases, causing serious harm.

The gender bias produced by Google’s AI language translation algorithm in the Turkish language is a clear example of the dangers of algorithmic bias. The algorithm translated a gender-neutral pronoun to describe men as entrepreneurial and women as lazy. Similarly, Tay, Microsoft’s AI-enabled chatbot, learned from screening Twitter feeds and published politically incorrect messages full of misogynistic, racist, pro-Nazi, and anti-Semitic content. The machine itself was not racist, but it learned racism from previous human behavior in its training data. These cases highlight the potential harm that AI systems can cause when they reproduce human biases.

AI-system biases have the veneer of objectivity, yet an algorithm created by machine learning can be just as biased and unjust as one written by humans. Worse, given their rapid proliferation in businesses and organizations, AI systems can cause serious harm by reproducing and even amplifying these biases at scale. For example, the COMPAS software used in some US courts to assess the potential risk of recidivism discriminated against racial minorities: Black defendants who did not actually re-offend were almost twice as likely to have been labeled as higher risk. The damage caused by algorithmic discrimination may not be deliberate. However, this does not mean that the company and the developers of the biased technology should not be held accountable. Acknowledging bias has led to calls for algorithms to be “explainable.”
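To make the COMPAS-style disparity concrete, here is a minimal Python sketch of how one might compare false positive rates across groups: the share of people who did not re-offend but were still labeled higher risk. The `Defendant` record, the group labels "A" and "B", and all the numbers are made up for illustration. This is not real COMPAS data, nor the method used in any published audit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Defendant:
    group: str        # hypothetical demographic label ("A" or "B")
    high_risk: bool   # did the tool label this person as higher risk?
    reoffended: bool  # did this person actually re-offend?


def false_positive_rate(records, group):
    """Share of non-re-offenders in `group` who were labeled higher risk."""
    non_reoffenders = [
        r for r in records if r.group == group and not r.reoffended
    ]
    if not non_reoffenders:
        raise ValueError(f"no non-re-offenders recorded for group {group!r}")
    flagged = sum(r.high_risk for r in non_reoffenders)
    return flagged / len(non_reoffenders)


# Toy records, invented for this example (NOT real COMPAS data).
records = (
    [Defendant("A", True, False)] * 4      # group A, flagged, did not re-offend
    + [Defendant("A", False, False)] * 6   # group A, not flagged, did not re-offend
    + [Defendant("B", True, False)] * 2    # group B, flagged, did not re-offend
    + [Defendant("B", False, False)] * 8   # group B, not flagged, did not re-offend
    + [Defendant("A", True, True)] * 5     # re-offenders do not affect the FPR
    + [Defendant("B", True, True)] * 5
)

fpr_a = false_positive_rate(records, "A")
fpr_b = false_positive_rate(records, "B")
print(f"False positive rate, group A: {fpr_a:.2f}")
print(f"False positive rate, group B: {fpr_b:.2f}")
print(f"Ratio A/B: {fpr_a / fpr_b:.1f}")
```

In this toy data set, group A's false positive rate is twice group B's, even though both groups contain the same number of people who did not re-offend. A gap like this can stay hidden if one only looks at overall accuracy.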

Automation and Employment

The deployment of AI has brought about a paradigm shift in the labor market. AI-driven robots are replacing blue-collar workers in factories, while robotic process automation (RPA) systems are taking over white-collar jobs in offices. AI-based platforms are writing essays, computer code, and creating art.

RPA has rapidly replaced humans in various fields, a trend that accelerated during the COVID-19 pandemic due to confinement measures. The resulting job losses are often subtle: most robots do not physically take over a worker’s desk. Instead, many of the lost jobs are positions that were once handled by individuals, or jobs at companies that went bankrupt. For instance, the explosive growth of streaming video platforms like Netflix caused companies like Blockbuster to close; many small bookstores and retailers closed, and some of their jobs were taken over by Amazon’s 200,000 robots.

On the other hand, the On-Demand Economy has created new jobs. Platforms such as Uber, Lyft, Crowdflower, TaskRabbit, and other Gig Economy companies built their business model by putting people in contact for micro-tasks. This model is also known as “crowdwork,” and contrary to what is happening with robots and RPA, it has fueled the proliferation of new jobs. However, these jobs are often associated with transient and non-linear careers, and the trend has devalued work, promoting wages below the legal minimum and becoming an excuse to avoid paying social security benefits.

Instead of solely focusing on designing machines that imitate human labor, AI can be utilized to augment and amplify human capabilities, leading to more effective and efficient outcomes for both businesses and society as a whole. This approach could help ensure that AI is not seen as a replacement for humans, but rather as a powerful tool that can be used to enhance human performance and productivity.

The impact of AI on the labor market has ambivalent implications. Some experts predict that AI will create new jobs and industries, offsetting the jobs it may displace. However, others argue that the speed of this disruption is unprecedented and that unemployment may become a significant problem. Regardless, it is crucial to address the issue of fair distribution of wealth and opportunities, especially for those who may lose their jobs.

Social Media and Public Discourse

Social media platforms sell users’ attention as a product to advertising companies; they use adaptive algorithms to personalize content and ads in endless user feeds. However, excessive use of social media may be associated with several mental health issues such as anxiety and depression, alongside other problems such as the dissemination of fake news, cyberbullying, and harassment.

Moreover, social media platforms are often exploited by hate activists to spread messages that provoke strong emotions against victims, leading to a vicious cycle that generates data for social media companies, creates more publicity for the topic, and attracts others to it. Social media has also been seen as an enabler of terror before, during, and after the 2019 Christchurch terrorist attacks in New Zealand.

The algorithms used by social media platforms can create informational bubbles, polarization, and radicalization, influencing our opinions and decisions. The competition for users’ attention has led to radicalization in societies. Cambridge Analytica’s role in Brexit and in the 2016 US presidential election, which Trump won, highlights the potential impact of social media on democratic participation: in both cases, these platforms were used as weapons.

Can you imagine the potential impact of an army of ChatGPT-style bots attempting to sway your political beliefs?

These five factors are the most relevant we found in the literature. They also constitute our proposal for a taxonomy to classify ethical problems of AI in business (although it can be applied in other areas such as education, public policies, or health).

Ethical Schools of Thought

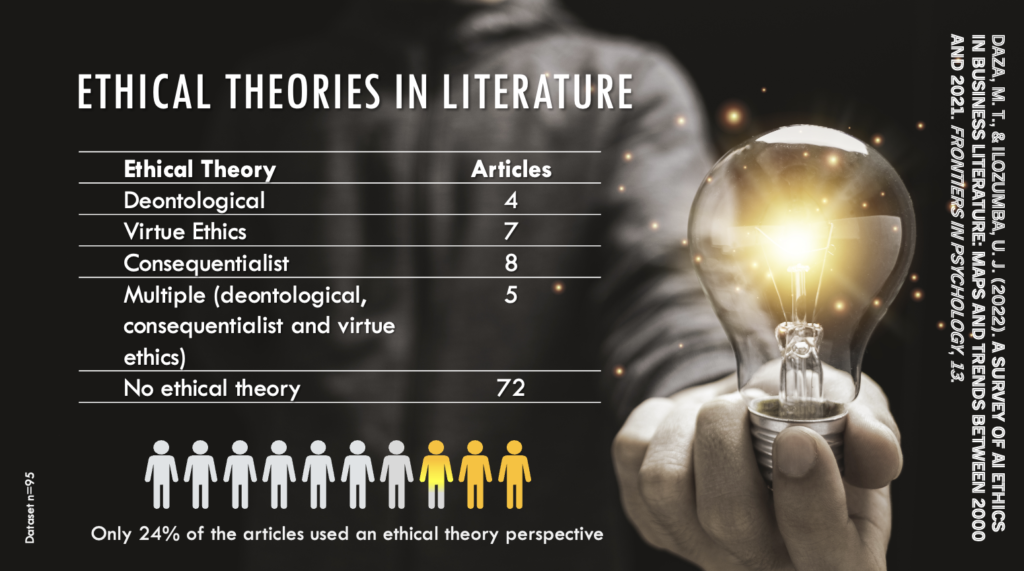

AI carries the potential to cause harm, whether intentionally or unintentionally, to individuals as well as to political, economic, and social stability. Ethical schools of thought can help address these challenges by differentiating what is right from what is wrong. That’s why we have analyzed the most important schools in the field of AI ethics in business.

The Consequentialist school is the most cited in our review. It holds that the morality of an action is determined by its consequences, and that an action is good if it produces more happiness than any other alternative. However, it has been criticized for neglecting individual rights.

In second place, Virtue Ethics focuses on character development, rather than on whether an action is good or bad. This ethical school emphasizes the formation of virtuous habits, through which people act justly and wisely in different situations.

Finally, Deontological ethics offers an alternative that focuses on the moral obligation to follow certain principles or rules, regardless of the consequences. It emphasizes the importance of justice and equity, and the protection of individual rights.

However, only 24% of articles use an ethical theory to support their positions on the ethical problems of AI in business. The rest of the authors only acknowledge that there are ethical problems and that they should be addressed in some way.

Addressing the five major ethical challenges of AI is crucial, and it’s important to approach them from the perspective of one or several schools of ethical thought. Ethical evaluation can help us differentiate between right and wrong and make clear decisions in ambiguous situations.

For example, is it ethical to release an autonomous driving AI to the market that can reduce traffic accidents by 80%? While an 80% reduction could save thousands of lives, the remaining 20% of accidents would still happen, now with an AI in control. Who takes responsibility for the lives lost in that 20%? These are the difficult ethical questions that we must confront when developing AI technologies.
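The trade-off in the autonomous driving example can be made concrete with simple arithmetic. The short Python sketch below splits a baseline number of accidents into those avoided and those that remain. The function name `accident_outcomes` and the baseline of 10,000 accidents are hypothetical and chosen only for illustration; they are not figures from our paper.

```python
def accident_outcomes(baseline_accidents, reduction):
    """Split a baseline accident count into (avoided, remaining).

    `reduction` is the fraction of accidents the AI is assumed to prevent,
    e.g. 0.80 for the 80% used in the example.
    """
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    avoided = baseline_accidents * reduction
    remaining = baseline_accidents - avoided
    return avoided, remaining


# Hypothetical baseline, assumed purely for illustration.
baseline = 10_000
avoided, remaining = accident_outcomes(baseline, 0.80)

print(f"Accidents avoided:   {avoided:,.0f}")
print(f"Accidents remaining: {remaining:,.0f}")
```

A strictly consequentialist reading would look only at the accidents avoided. A deontological reading would also ask who is accountable for each of the accidents that remain.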

To ensure the responsible development and deployment of AI, we must consider the ethical implications and trade-offs involved. Different ethical frameworks offer valuable guidance for making sound ethical decisions in AI development and use. It is necessary to adopt a holistic approach to ensure that the benefits of AI are maximized while minimizing its potential harm.

Read our full paper “A survey of AI ethics in business literature: Maps and trends between 2000 and 2021” here: https://t.co/epUEiEAxki

Daza, M. T., & Ilozumba, U. J. (2022). A survey of AI ethics in Business Literature: Maps and trends between 2000 and 2021. Frontiers in Psychology, 13. https://doi.org/10.3389/fpsyg.2022.1042661