A research article (preprint) by: Alejo Jose G. Sison, Marco Tulio Daza, Roberto Gozalo-Brizuela and Eduardo C. Garrido-Merchan.

ChatGPT is a chatbot that can generate coherent and diverse texts on almost any topic. Since its rollout in November 2022, a host of ethical issues has arisen from the use of ChatGPT: bias, privacy, misinformation, and job displacement, among others.

In our latest research paper, “ChatGPT: More than a “Weapon of Mass Deception” Ethical challenges and responses from the Human-Centered Artificial Intelligence (HCAI) perspective” (preprint available here), we explore some of the ethical issues that arise from using ChatGPT and suggest responses based on the HCAI framework, with a view to enhancing human flourishing.

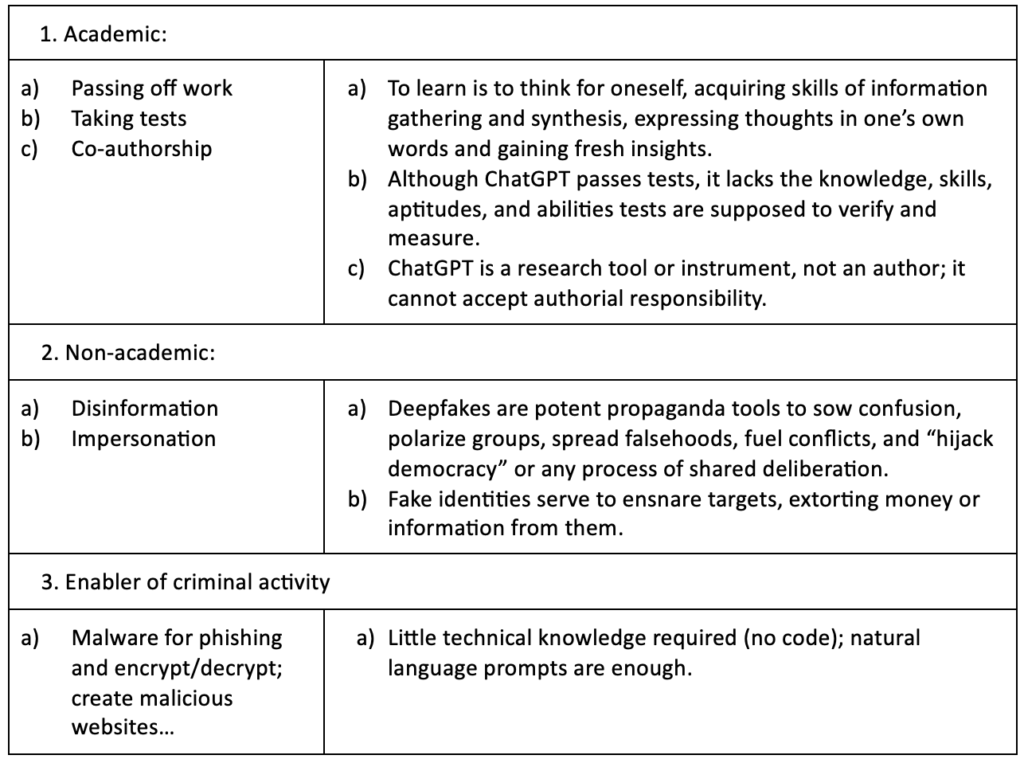

We have identified the greatest risk associated with ChatGPT as its potential for misuse in generating and spreading misinformation and disinformation, as well as enabling criminal activities that rely on deception. This makes ChatGPT a potential “weapon of mass deception” (WMD). The following table classifies the ethical risks we have found into three categories.

In our paper, we address these ethical challenges related to ChatGPT by employing both technical and non-technical approaches. However, it’s important to acknowledge that no combination of solutions can eliminate the risk of deception and other malicious activities involving ChatGPT. Therefore, we focus on reducing harm.

It is important to note that ChatGPT cannot intentionally produce harm, because it has no intentions. It simply predicts the next word based on statistical correlations in the training data and the prompt, working as an advanced autocomplete that neither considers nor distinguishes between truth and falsehood.
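To make the autocomplete point concrete, here is a minimal sketch of next-word prediction from word-pair counts. It is a toy stand-in, not how ChatGPT is built: ChatGPT uses a large neural network, while this example only counts which word follows which. The function names `train_bigrams` and `predict_next` and the tiny corpus are our own illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text: the false statement appears more often than the true one.
corpus = (
    "the moon is made of cheese . "
    "the moon is made of rock . "
    "the moon is made of cheese ."
)
model = train_bigrams(corpus)

# The prediction follows frequency in the data, not truth.
print(predict_next(model, "of"))
```

The model completes "of" with whichever word followed it most often in the corpus. Nothing in the procedure checks whether the resulting sentence is true.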

As such, the technology itself is neutral, and it is up to the user to accept legal, scientific, moral, and social responsibility for their publications or to consent to terms of use and distribution. Scientists do not attribute co-authorial rights to instruments or software, so it is misleading to list ChatGPT as a co-author, as this implies false and unscientific claims and attributions (Marcus, 2023). Authorship carries accountability for the work, and AI tools cannot take on that accountability. Researchers who use LLM tools should document their use in the methods or acknowledgment sections, as recommended in ‘The AI writing on the wall’ (2023).

The following list presents our proposals for mitigating the risk of ChatGPT being misused to produce disinformation and deception.

- Technical resources:
  - Statistical watermarking
  - Identifying AI styleme
  - ChatGPT detectors: GPTZeroX, DetectGPT, OpenAI Classifier
  - Fact-checking websites

- Non-technical resources:
  - Enforce terms of use, content moderation, safety & overall best practices
  - Transparency about what ChatGPT can and cannot (or should not) do
  - Educator considerations: age, educational level, and domain-appropriate use under supervision
  - “Humans in the loop” (HITL) & knowledge of principles of human-AI interaction
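As an illustration of the first technical resource, the sketch below shows one way a statistical watermark could be detected. It assumes a "green list" style scheme: a secret key and the previous word decide which next words count as "green", a watermarking generator prefers green words, and a detector checks whether a text contains more green words than chance would explain. The key, the word-level granularity, the 50% green fraction, and every function name are assumptions made for this example. It is not the scheme used by any particular product.

```python
import hashlib
import math

GAMMA = 0.5  # assumed fraction of words that count as "green" after any given word


def is_green(prev_word, word, key="demo-key"):
    """Pseudo-randomly mark `word` as green or not, seeded by the key and the previous word."""
    digest = hashlib.sha256(f"{key}|{prev_word}|{word}".encode("utf-8")).digest()
    return digest[0] < 256 * GAMMA


def watermark_z_score(words, key="demo-key"):
    """Standard deviations by which the green-word count exceeds what chance predicts."""
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    n = len(pairs)
    green = sum(is_green(prev, word, key) for prev, word in pairs)
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


def toy_watermarked_text(vocab, length, key="demo-key"):
    """Stand-in for a watermarking generator: always pick a green word when one exists."""
    words = [vocab[0]]
    while len(words) < length:
        prev = words[-1]
        choice = next((w for w in vocab if is_green(prev, w, key)), vocab[0])
        words.append(choice)
    return words


# Hypothetical vocabulary for the toy generator.
VOCAB = [
    "the", "quick", "brown", "fox", "jumps", "over", "lazy", "dog", "while", "seven",
    "curious", "owls", "watch", "from", "a", "tall", "old", "oak", "tree", "nearby",
]

marked = toy_watermarked_text(VOCAB, 41)
print(round(watermark_z_score(marked), 2))
```

A large positive score suggests the text was produced by a generator that knows the key. Text written without the key should score near zero on average, although short texts can land well above or below zero by chance. Paraphrasing can also erase the signal, which is one reason we treat these tools as harm reduction rather than a complete solution.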

An HCAI assessment

Additionally, we assessed ChatGPT using the Six Human-Centered AI Grand Challenges framework proposed by Ozmen Garibay et al. (2023), which aims at AI technologies that are human-centered, that is, ethical, fair, and that enhance the human condition. In essence, these challenges advocate for a human-centered approach to AI that (1) is centered on human well-being, (2) is designed responsibly, (3) respects privacy, (4) follows human-centered design principles, (5) is subject to appropriate governance and oversight, and (6) interacts with individuals while respecting humans’ cognitive capacities. Our findings show that ChatGPT’s developers have failed to address four of these six challenges.

- First, ChatGPT falls short in responsible design: it fails to determine who is responsible for what and to clarify both legal and ethical liabilities in the user interface.

- Second, ChatGPT lacks respect for privacy, which includes various rights such as being left alone, controlling personal information, and protecting intimacy. ChatGPT’s conversations can unintentionally infringe on these rights, increasing the risk of causing psychological harm.

- Third, it does not follow human-centered design principles: it fails to calibrate risks properly and focuses more on maximizing AI objective functions than on human and societal well-being.

- Lastly, ChatGPT has not been subjected to appropriate governance and oversight, and its developers have failed to consider current and prospective regulatory standards and certifications.

To make the best use of ChatGPT, developers should prioritize human development and well-being by moving beyond usability to consider emotions, beliefs, preferences, and physical and psychological responses, effectively augmenting and enhancing the user experience while preserving dignity and agency.

Best uses for ChatGPT

Finally, we have identified the best uses for ChatGPT. In creative writing, these include brainstorming, idea generation, and text style transformation. Non-creative writing applications such as spelling and grammar checks, summarization, copywriting and copy editing, and coding assistance can also benefit from ChatGPT. In teaching and learning contexts, ChatGPT can be useful for preparing lesson plans and scripts, as a critical thinking tool for assessment and evaluation, and as a language tutor and writing quality benchmark.

In conclusion, ChatGPT is a powerful but perilous tool that should be used with care and responsibility. We urge users to be aware of the risks and limitations of ChatGPT and similar chatbots. We also urge developers and regulators to establish ethical standards and guidelines for the design and use of such chatbots, and to ensure that they respect human dignity, privacy, and security.

The manuscript “ChatGPT: More than a “Weapon of Mass Deception” Ethical challenges and responses from the Human-Centered Artificial Intelligence (HCAI) perspective” is under review by the International Journal of Human-Computer Interaction. The preprint is available here: https://bit.ly/3MYLSGA.

References

- Marcus, G. (2023, January 14). Scientists, please don’t let your chatbots grow up to be co-authors. The Road to AI We Can Trust. https://garymarcus.substack.com/p/scientists-please-dont-let-your-chatbots

- Ozmen Garibay, O., Winslow, B., Andolina, S., Antona, M., Bodenschatz, A., Coursaris, C., Falco, G., Fiore, S. M., Garibay, I., Grieman, K., Havens, J. C., Jirotka, M., Kacorri, H., Karwowski, W., Kider, J., Konstan, J., Koon, S., Lopez-Gonzalez, M., Maifeld-Carucci, I., … Xu, W. (2023). Six Human-Centered Artificial Intelligence Grand Challenges. International Journal of Human-Computer Interaction, 39(3), 391–437. https://doi.org/10.1080/10447318.2022.2153320

- The AI writing on the wall. (2023, January 20). Nature Machine Intelligence, 5(1), 1. https://doi.org/10.1038/s42256-023-00613-9